Pigeon 3D Tracking

3D-POP Dataset

Using a large motion tracking system and pigeons with attached head and backpack markers, we tracked the fine-scale position and orientation of individual pigeons. We annotated 5-10 frames to find the position of keypoints of interest (e.g. eyes, beak) relative to the markers, then propagated the keypoints using the motion tracking information. This allowed us to produce annotations for bounding boxes, individual trajectories, and 2D and 3D keypoints for groups of 1, 2, 5 and 10 pigeons.
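The propagation step described above can be sketched as a rigid-body transform. This is a minimal illustration, not the dataset's actual pipeline: it assumes the motion tracking system reports, for each frame, a rotation matrix and a translation for the marker pattern, and that a keypoint sits at a fixed offset in the marker's local frame. The function names `estimate_local_offset` and `propagate_keypoint` are hypothetical.

```python
import numpy as np


def estimate_local_offset(rotations, translations, keypoints_world):
    """Estimate a keypoint's fixed position in the marker frame.

    rotations:       (n, 3, 3) marker-to-world rotation per annotated frame
    translations:    (n, 3) marker origin in world coordinates
    keypoints_world: (n, 3) annotated keypoint in world coordinates
    """
    # p_local = R^T (p_world - t), computed for each annotated frame
    local = np.einsum("nji,nj->ni", rotations, keypoints_world - translations)
    # Averaging over the few annotated frames reduces annotation noise
    return local.mean(axis=0)


def propagate_keypoint(rotations, translations, local_offset):
    """Map the fixed local offset into world coordinates for every tracked frame."""
    # p_world = R p_local + t
    return np.einsum("nij,j->ni", rotations, local_offset) + translations
```

With an offset estimated from a handful of annotated frames, `propagate_keypoint` can be called on the marker poses of every other frame to obtain 3D keypoints, which could then be projected into each camera view for 2D annotations.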

3D-MuPPET: 3D Multi-Pigeon Pose Estimation and Tracking

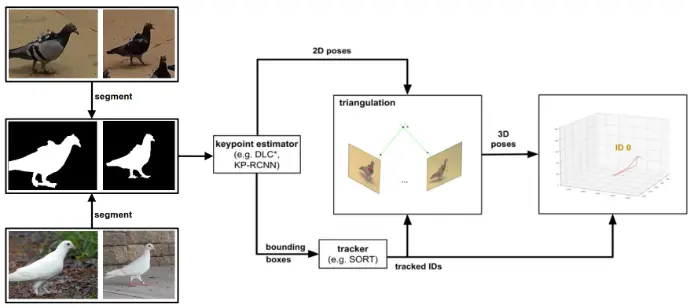

Using the 3D-POP dataset, we trained 2D keypoint detectors and triangulated the 2D postures into 3D. The approach yielded high accuracy (median keypoint error of 6.9 mm). We also show that a model trained on single-pigeon data works with multiple pigeons, so biologists may be able to avoid annotating multi-animal data, which is much more labour-intensive than labelling single individuals.
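To illustrate how 2D detections from several cameras can be lifted to 3D, here is a minimal sketch of linear (DLT) triangulation. The text above does not state which triangulation method was used, so DLT is an assumption chosen because it is a common baseline. The 3x4 projection matrices are assumed to come from camera calibration, and `triangulate_dlt` and `project` are hypothetical helper names.

```python
import numpy as np


def project(P, point_3d):
    """Project a 3D point into an image using a 3x4 projection matrix."""
    x = P @ np.append(point_3d, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(projection_matrices, points_2d):
    """Triangulate one 3D point from its 2D detections in two or more views."""
    rows = []
    for P, (u, v) in zip(projection_matrices, points_2d):
        # Each view contributes two linear constraints on the homogeneous point
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The least-squares solution is the right singular vector
    # belonging to the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Running this once per keypoint and per frame yields a 3D posture. In practice, low-confidence 2D detections would usually be filtered out before triangulation.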

Pigeons Everywhere: 2D postures of pigeons in all environments

Finally, we show that a model trained on data collected in captivity (3D-POP) also works for pigeons in the wild, allowing 2D and 3D posture estimation of multiple individuals in the field. Using the Segment Anything Model (SAM), we trained a 2D keypoint detection model on masked pigeons to remove the influence of the background. During inference, we first used a pre-trained Mask R-CNN model to obtain pigeon masks, and obtained 2D keypoint detections without additional annotations for training.
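The background-removal idea can be sketched as follows. This is only an illustration of applying a mask to an image: it assumes the segmentation model has already produced a boolean mask for one pigeon, and it does not call SAM or Mask R-CNN. The function name `mask_background` and the choice of a black fill are assumptions.

```python
import numpy as np


def mask_background(image, mask, fill_value=0):
    """Keep pixels inside the mask and set all other pixels to fill_value.

    image: (H, W) or (H, W, C) array
    mask:  (H, W) array, truthy where the pigeon is
    """
    mask = np.asarray(mask, dtype=bool)
    # Start from a uniform background, then copy over the pigeon pixels
    masked = np.full_like(image, fill_value)
    masked[mask] = image[mask]
    return masked
```

Feeding the keypoint detector images like this at both training and inference time means it never sees the background, which is one way a model trained in captivity could carry over to field recordings.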

Texture Independent Framework

Bachelor student Valentin Schmuker (supervised by Urs Waldmann) extended the 3D-MuPPET framework to be texture-independent. Using SAM, Valentin first created silhouettes from 3D-POP, then trained DeepLabCut (DLC) to predict keypoints from the masks. Results seem promising, and the approach can also generalize to other species!